- New

-

Topics

- All Categories

- Metaphysics and Epistemology

- Value Theory

- Science, Logic, and Mathematics

- Science, Logic, and Mathematics

- Logic and Philosophy of Logic

- Philosophy of Biology

- Philosophy of Cognitive Science

- Philosophy of Computing and Information

- Philosophy of Mathematics

- Philosophy of Physical Science

- Philosophy of Social Science

- Philosophy of Probability

- General Philosophy of Science

- Philosophy of Science, Misc

- History of Western Philosophy

- Philosophical Traditions

- Philosophy, Misc

- Other Academic Areas

- Journals

- Submit material

- More

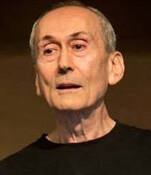

Works by Stevan Harnad

93 found

- The symbol grounding problem. Stevan Harnad - 1990 - Physica D 42:335-346. There has been much discussion recently about the scope and limits of purely symbolic models of the mind and about the proper role of connectionism in cognitive modeling. This paper describes the symbol grounding problem: How can the semantic interpretation of a formal symbol system be made intrinsic to the system, rather than just parasitic on the meanings in our heads? How can the meanings of the meaningless symbol tokens, manipulated solely on the basis of their shapes, be grounded (...)

- Consciousness: An afterthought. Stevan Harnad - 1982 - Cognition and Brain Theory 5:29-47. There are many possible approaches to the mind/brain problem. One of the most prominent, and perhaps the most practical, is to ignore it.

- 4 Years of Animal Sentience. Walter Veit & Stevan Harnad - forthcoming - Psychology Today.

- Categorical perception. Stevan Harnad - 2003 - In L. Nadel (ed.), Encyclopedia of Cognitive Science. Nature Publishing Group. pp. 67--4.

- Connecting object to symbol in modeling cognition. Stevan Harnad - 1992 - In A. Clark & Ronald Lutz (eds.), Connectionism in Context. Springer Verlag. pp. 75--90. Connectionism and computationalism are currently vying for hegemony in cognitive modeling. At first glance the opposition seems incoherent, because connectionism is itself computational, but the form of computationalism that has been the prime candidate for encoding the "language of thought" has been symbolic computationalism (Dietrich 1990, Fodor 1975, Harnad 1990c; Newell 1980; Pylyshyn 1984), whereas connectionism is nonsymbolic (Fodor & Pylyshyn 1988), or, as some have hopefully dubbed it, "subsymbolic" (Smolensky 1988). This paper will examine what is and is not (...)

- Psychophysical and cognitive aspects of categorical perception: A critical overview. Stevan Harnad - unknown. There are many entry points into the problem of categorization. Two particularly important ones are the so-called top-down and bottom-up approaches. Top-down approaches such as artificial intelligence begin with the symbolic names and descriptions for some categories already given; computer programs are written to manipulate the symbols. Cognitive modeling involves the further assumption that such symbol-interactions resemble the way our brains do categorization. An explicit expectation of the top-down approach is that it will eventually join with the bottom-up approach, which (...)

- Minds, machines and Searle. Stevan Harnad - 1989 - Journal of Theoretical and Experimental Artificial Intelligence 1:5-25. Searle's celebrated Chinese Room Argument has shaken the foundations of Artificial Intelligence. Many refutations have been attempted, but none seem convincing. This paper is an attempt to sort out explicitly the assumptions and the logical, methodological and empirical points of disagreement. Searle is shown to have underestimated some features of computer modeling, but the heart of the issue turns out to be an empirical question about the scope and limits of the purely symbolic (computational) model of the mind. Nonsymbolic modeling (...)

- Minds, machines and Searle. Stevan Harnad - 1989 - Journal of Experimental and Theoretical Artificial Intelligence 1 (4):5-25. Searle's celebrated Chinese Room Argument has shaken the foundations of Artificial Intelligence. Many refutations have been attempted, but none seem convincing. This paper is an attempt to sort out explicitly the assumptions and the logical, methodological and empirical points of disagreement. Searle is shown to have underestimated some features of computer modeling, but the heart of the issue turns out to be an empirical question about the scope and limits of the purely symbolic model of the mind. Nonsymbolic modeling turns (...)

- To Cognize is to Categorize: Cognition is Categorization. Stevan Harnad - 2005 - In C. Lefebvre & H. Cohen (eds.), Handbook of Categorization. Elsevier. 2. Invariant Sensorimotor Features ("Affordances"). To say this is not to declare oneself a Gibsonian, whatever that means. It is merely to point out that what a sensorimotor system can do is determined by what can be extracted from its motor interactions with its sensory input. If you lack sonar sensors, then your sensorimotor system cannot do what a bat's can do, at least not without the help of instruments. Light stimulation affords color vision for those of us with the (...)

- Virtual symposium on virtual mind. Patrick Hayes, Stevan Harnad, Donald Perlis & Ned Block - 1992 - Minds and Machines 2 (3):217-238. When certain formal symbol systems (e.g., computer programs) are implemented as dynamic physical symbol systems (e.g., when they are run on a computer) their activity can be interpreted at higher levels (e.g., binary code can be interpreted as LISP, LISP code can be interpreted as English, and English can be interpreted as a meaningful conversation). These higher levels of interpretability are called "virtual" systems. If such a virtual system is interpretable as if it had a mind, is such a "virtual (...)

- Why and how we are not zombies. Stevan Harnad - 1994 - Journal of Consciousness Studies 1 (2):164-67. A robot that is functionally indistinguishable from us may or may not be a mindless Zombie. There will never be any way to know, yet its functional principles will be as close as we can ever get to explaining the mind.

- Minds, machines and Turing: The indistinguishability of indistinguishables. Stevan Harnad - 2000 - Journal of Logic, Language and Information 9 (4):425-445. Turing's celebrated 1950 paper proposes a very general methodological criterion for modelling mental function: total functional equivalence and indistinguishability. His criterion gives rise to a hierarchy of Turing Tests, from subtotal ("toy") fragments of our functions (t1), to total symbolic (pen-pal) function (T2 -- the standard Turing Test), to total external sensorimotor (robotic) function (T3), to total internal microfunction (T4), to total indistinguishability in every empirically discernible respect (T5). This is a "reverse-engineering" hierarchy of (decreasing) empirical underdetermination of the theory (...)

- Can a machine be conscious? How? Stevan Harnad - 2003 - Journal of Consciousness Studies 10 (4-5):67-75. A "machine" is any causal physical system, hence we are machines, hence machines can be conscious. The question is: which kinds of machines can be conscious? Chances are that robots that can pass the Turing Test -- completely indistinguishable from us in their behavioral capacities -- can be conscious (i.e. feel), but we can never be sure (because of the "other-minds" problem). And we can never know HOW they have minds, because of the "mind/body" problem. We can only know how (...)

- Computation is just interpretable symbol manipulation; cognition isn't. Stevan Harnad - 1994 - Minds and Machines 4 (4):379-90. Computation is interpretable symbol manipulation. Symbols are objects that are manipulated on the basis of rules operating only on their shapes, which are arbitrary in relation to what they can be interpreted as meaning. Even if one accepts the Church/Turing Thesis that computation is unique, universal and very near omnipotent, not everything is a computer, because not everything can be given a systematic interpretation; and certainly everything can't be given every systematic interpretation. But even after computers and computation have been successfully distinguished (...)

- Distributed processes, distributed cognizers, and collaborative cognition. Stevan Harnad - 2005 - Pragmatics and Cognition 13 (3):501-514. Cognition is thinking; it feels like something to think, and only those who can feel can think. There are also things that thinkers can do. We know neither how thinkers can think nor how they are able to do what they can do. We are waiting for cognitive science to discover how. Cognitive science does this by testing hypotheses about what processes can generate what doing. This is called the Turing Test. It cannot test whether a process can generate feeling, hence (...)

- Symbol grounding and the symbolic theft hypothesis. Angelo Cangelosi, Alberto Greco & Stevan Harnad - 2002 - In A. Cangelosi & D. Parisi (eds.), Simulating the Evolution of Language. Springer Verlag. pp. 191--210. Scholars studying the origins and evolution of language are also interested in the general issue of the evolution of cognition. Language is not an isolated capability of the individual, but has intrinsic relationships with many other behavioral, cognitive, and social abilities. By understanding the mechanisms underlying the evolution of linguistic abilities, it is possible to understand the evolution of cognitive abilities. Cognitivism, one of the current approaches in psychology and cognitive science, proposes that symbol systems capture mental phenomena, and attributes (...)

- Symbol‐grounding Problem. Stevan Harnad - 2003 - In L. Nadel (ed.), Encyclopedia of Cognitive Science. Nature Publishing Group.

- Turing indistinguishability and the blind watchmaker. Stevan Harnad - 2002 - In James H. Fetzer (ed.), Consciousness Evolving. John Benjamins. pp. 3-18. Many special problems crop up when evolutionary theory turns, quite naturally, to the question of the adaptive value and causal role of consciousness in human and nonhuman organisms. One problem is that -- unless we are to be dualists, treating it as an independent nonphysical force -- consciousness could not have had an independent adaptive function of its own, over and above whatever behavioral and physiological functions it "supervenes" on, because evolution is completely blind to the difference between a conscious (...)

- Symbol grounding and the origin of language. Stevan Harnad - 2002 - In Matthias Scheutz (ed.), Computationalism: New Directions. MIT Press. What language allows us to do is to "steal" categories quickly and effortlessly through hearsay instead of having to earn them the hard way, through risky and time-consuming sensorimotor "toil" (trial-and-error learning, guided by corrective feedback from the consequences of miscategorisation). To make such linguistic "theft" possible, however, some, at least, of the denoting symbols of language must first be grounded in categories that have been earned through sensorimotor toil (or else in categories that have already been "prepared" for us (...)

- Validating research performance metrics against peer rankings. Stevan Harnad - 2008 - Ethics in Science and Environmental Politics 8 (1):103-107.

- The Latent Structure of Dictionaries. Philippe Vincent-Lamarre, Alexandre Blondin Massé, Marcos Lopes, Mélanie Lord, Odile Marcotte & Stevan Harnad - 2016 - Topics in Cognitive Science 8 (3):625-659. How many words—and which ones—are sufficient to define all other words? When dictionaries are analyzed as directed graphs with links from defining words to defined words, they reveal a latent structure. Recursively removing all words that are reachable by definition but that do not define any further words reduces the dictionary to a Kernel of about 10% of its size. This is still not the smallest number of words that can define all the rest. About 75% of the Kernel turns (...)

- Categorical Perception and the Evolution of Supervised Learning in Neural Nets. Stevan Harnad & SJ Hanson - unknown. Some of the features of animal and human categorical perception (CP) for color, pitch and speech are exhibited by neural net simulations of CP with one-dimensional inputs: When a backprop net is trained to discriminate and then categorize a set of stimuli, the second task is accomplished by "warping" the similarity space (compressing within-category distances and expanding between-category distances). This natural side-effect also occurs in humans and animals. Such CP categories, consisting of named, bounded regions of similarity space, may be (...)

- Learned Inquiry and the Net: The Role of Peer Review, Peer Commentary and Copyright. Stevan Harnad - unknown. Peer Review and Copyright each have a double role: Formal refereeing protects (R1) the author from publishing and (R2) the reader from reading papers that are not of sufficient quality. Copyright protects the author from (C1) theft of text and (C2) theft of authorship. It has been suggested that in the electronic medium we can dispense with peer review, "publish" everything, and let browsing and commentary do the quality control. It has also been suggested that special safeguards and laws may (...)

- Creative disagreement. Stevan Harnad - unknown. Do scientists agree? It is not only unrealistic to suppose that they do, but probably just as unrealistic to think that they ought to. Agreement is for what is already established scientific history. The current and vital ongoing aspect of science consists of an active and often heated interaction of data, ideas and minds, in a process one might call "creative disagreement." The "scientific method" is largely derived from a reconstruction based on selective hindsight. What actually goes on has much (...)

- Grounding symbols in the analog world with neural nets: a hybrid model. Stevan Harnad - unknown. 1.1 The predominant approach to cognitive modeling is still what has come to be called "computationalism" (Dietrich 1990, Harnad 1990b), the hypothesis that cognition is computation. The more recent rival approach is "connectionism" (Hanson & Burr 1990, McClelland & Rumelhart 1986), the hypothesis that cognition is a dynamic pattern of connections and activations in a "neural net." Are computationalism and connectionism really deeply different from one another, and if so, should they compete for cognitive hegemony, or should they collaborate? These (...)

- Correlation vs. causality: How/why the mind-body problem is hard. Stevan Harnad - 2000 - Journal of Consciousness Studies 7 (4):54-61. The Mind/Body Problem is about causation not correlation. And its solution will require a mechanism in which the mental component somehow manages to play a causal role of its own, rather than just supervening superfluously on other, nonmental components that look, for all the world, as if they can do the full causal job perfectly well without it. Correlations confirm that M does indeed "supervene" on B, but causality is needed to show how/why M is not supererogatory; and that's the (...)

- Scholarly skywriting and the prepublication continuum of scientific inquiry. Stevan Harnad - unknown. William Gardner's proposal to establish a searchable, retrievable electronic archive is fine, as far as it goes. The potential role of electronic networks in scientific publication, however, goes far beyond providing searchable electronic archives for electronic journals. The whole process of scholarly communication is currently undergoing a revolution comparable to the one occasioned by the invention of printing. On the brink of intellectual perestroika is that vast PREPUBLICATION phase of scientific inquiry in which ideas and findings are discussed informally with (...)

- Sleep and Dreaming: Scientific Advances and Reconsiderations. Edward F. Pace-Schott, Mark Solms, Mark Blagrove & Stevan Harnad (eds.) - 2003 - Cambridge University Press.

- Experimental Analysis of Naming Behavior Cannot Explain Naming Capacity. Stevan Harnad - unknown. The experimental analysis of naming behavior can tell us exactly the kinds of things Horne & Lowe (H & L) report here: (1) the conditions under which people and animals succeed or fail in naming things and (2) the conditions under which bidirectional associations are formed between inputs (objects, pictures of objects, seen or heard names of objects) and outputs (spoken names of objects, multimodal operations on objects). The "stimulus equivalence" that H & L single out is really just the (...)

- Grounding Symbolic Capacity in Robotic Capacity. Stevan Harnad - unknown. According to "computationalism" (Newell, 1980; Pylyshyn 1984; Dietrich 1990), mental states are computational states, so if one wishes to build a mind, one is actually looking for the right program to run on a digital computer. A computer program is a semantically interpretable formal symbol system consisting of rules for manipulating symbols on the basis of their shapes, which are arbitrary in relation to what they can be systematically interpreted as meaning. According to computationalism, every physical implementation of the right (...)

- Learned Categorical Perception in Neural Nets: Implications for Symbol Grounding. Stevan Harnad & Stephen J. Hanson - unknown. After people learn to sort objects into categories they see them differently. Members of the same category look more alike and members of different categories look more different. This phenomenon of within-category compression and between-category separation in similarity space is called categorical perception (CP). It is exhibited by human subjects, animals and neural net models. In backpropagation nets trained first to auto-associate 12 stimuli varying along a one-dimensional continuum and then to sort them into 3 categories, CP arises as a (...)

- Rational Disagreement in Peer Review. [REVIEW] Stevan Harnad - 1985 - Science, Technology and Human Values 10 (3):55-62.

- Other bodies, other minds: A machine incarnation of an old philosophical problem. [REVIEW] Stevan Harnad - 1991 - Minds and Machines 1 (1):43-54. Explaining the mind by building machines with minds runs into the other-minds problem: How can we tell whether any body other than our own has a mind when the only way to know is by being the other body? In practice we all use some form of Turing Test: If it can do everything a body with a mind can do such that we can't tell them apart, we have no basis for doubting it has a mind. But what is (...)

- A subversive proposal. Stevan Harnad - 1995 - In A. Okerson & J. O'Donnell (eds.), Scholarly Journals at the Crossroads: A Subversive Proposal for Electronic Publishing.

- BBS Valedictory Editorial. Stevan Harnad - 2003 - Behavioral and Brain Sciences 26 (1):1-2.

- Metaphor and Mental Duality. Stevan Harnad - 1982 - In T. Simon & R. Scholes (eds.), Language, Mind, And Brain. Hillsdale NJ: Erlbaum. pp. 189-211. I am going to attempt to argue, given certain premises, that there are reasons, not only empirical, but also logical, for expecting a certain division of labor in the processing of information by the human brain. This division of labor consists specifically of a functional bifurcation into what may be called, to a first approximation, "verbal" and "nonverbal" modes of information-processing. That this dichotomy is not quite satisfactory, however, will be one of the principal conclusions of this chapter, for I (...)

- Preface. Stevan Harnad - 1994 - Minds and Machines 4 (4):377-378.

- The Timing of a Conscious Decision: From Ear to Mouth. Stevan Harnad - unknown. Libet, Gleason, Wright, & Pearl (1983) asked participants to report the moment at which they freely decided to initiate a pre-specified movement, based on the position of a red marker on a clock. Using event-related potentials (ERPs), Libet found that the subjective feeling of deciding to perform a voluntary action came after the onset of the motor “readiness potential” (RP). This counterintuitive conclusion poses a challenge for the philosophical notion of free will. Faced with these findings, Libet (1985) proposed that (...)

- Free at last: The future of peer-reviewed journals. Stevan Harnad - unknown. I have a feeling that when Posterity looks back at the last decade of the 2nd A.D. millennium of scholarly and scientific research on our planet, it may chuckle at us. It is not the pace of our scholarly and scientific research that will look risible, nor the tempo of technological change. On the contrary, the astonishing speed and scale of both will make the real anomaly look all the more striking.

- Interactive Cognition: Exploring the Potential of Electronic Quote/Commenting. Stevan Harnad - unknown. Human cognition is not an island unto itself. As a species, we are not Leibnizian Monads independently engaging in clear, Cartesian thinking. Our minds interact. That's surely why our species has language. And that interactivity probably constrains both what and how we think.

- Creativity: method or magic? Stevan Harnad - 2007 - In Henri Cohen & Brigitte Stemmer (eds.), Consciousness and Cognition: Fragments of Mind and Brain. Elsevier Academic Press. Creativity may be a trait, a state or just a process defined by its products. It can be contrasted with certain cognitive activities that are not ordinarily creative, such as problem solving, deduction, induction, learning, imitation, trial and error, heuristics and "abduction"; however, all of these can be done creatively too. There are four kinds of theories, attributing creativity respectively to (1) method, (2) "memory" (innate structure), (3) magic or (4) mutation. These theories variously emphasize the role of an unconscious (...)

- Turing on reverse-engineering the mind. Stevan Harnad - 1999 - Journal of Logic, Language, and Information.

- Valedictory editorial. Stevan Harnad - 2003 - Behavioral and Brain Sciences 26 (1):1-1.

- Waking OA's “Slumbering Giant”: The University's Mandate To Mandate Open Access. Stevan Harnad - unknown. SUMMARY: Universities (the universal research-providers) as well as research funders (public and private) are beginning to make it part of their mandates to ensure not only that researchers conduct and publish peer-reviewed research (“publish or perish”), but that they also make it available online, free for all. This is called Open Access (OA), and it maximizes the uptake, impact and progress of research by making it accessible to all potential users worldwide, not just those whose universities can afford to subscribe (...)

- Minds, machines, and Searle 2: What's right and wrong about the Chinese room argument. Stevan Harnad - 2003 - In John M. Preston & John Mark Bishop (eds.), Views Into the Chinese Room: New Essays on Searle and Artificial Intelligence. Oxford University Press. When in 1979 Zenon Pylyshyn, associate editor of Behavioral and Brain Sciences (BBS, a peer commentary journal which I edit) informed me that he had secured a paper by John Searle with the unprepossessing title of [XXXX], I cannot say that I was especially impressed; nor did a quick reading of the brief manuscript -- which seemed to be yet another tedious "Granny Objection"[1] about why/how we are not computers -- do anything to upgrade that impression.

- Responses to 'computationalism'. Imre Balogh, Brian Beakley, Paul Churchland, Michael Gorman, Stevan Harnad, David Mertz, H. H. Pattee, William Ramsey, John Ringen, Georg Schwarz, Brian Slator, Alan Strudler & Charles Wallis - 1990 - Social Epistemology 4 (2):155-199.

- Evidence of hypertext in the scholarly archive. Leslie Carr & Stevan Harnad - unknown. Dalgaard's recent article [3] argues that the part of the Web that constitutes the scientific literature is composed of increasingly linked archives. He describes the move in the online communications of the scientific community towards an expanding zone of second-order textuality, of an evolving network of texts commenting on, citing, classifying, abstracting, listing and revising other texts. In this respect, archives are becoming a network of texts rather than simply a classified collection of texts. He emphasizes the definition of hypertext (...)

- Controversies in Neuroscience III: Signal transduction in the retina. Paul Cordo & Stevan Harnad - 1995 - Behavioral and Brain Sciences 18 (3):401-401.

- Broca's area and language evolution. Stevan Harnad - 2005 - Behavioral and Brain Sciences 28 (4):1-5. Grodzinsky associates Broca's area with three kinds of deficit, relating to articulation, comprehension (involving trace deletion), and production (involving "tree priming"). Could these be special cases of one deficit? Evidence from research on language evolution suggests that they may all involve syllable structure or those aspects of syntax that evolved through exploiting the neural mechanisms underlying syllable structure.

- Codes, communication and cognition. Stevan Harnad - 2019 - Behavioral and Brain Sciences 42. Brette criticizes the notion of neural coding because it seems to entail that neural signals need to be “decoded” by or for some receiver in the head. If that were so, then neural coding would indeed be homuncular, requiring an entity to decipher the code. But I think Brette's plea to think instead in terms of complex, interactive causal throughput is preaching to the converted. Turing has already shown the way. In any case, the metaphor of neural coding has little to (...)

Showing 1-50 of 93.